Getting started with the AWS Multi-Agent Orchestrator Framework

In this article, I explore the AWS Multi-Agent Orchestrator Framework using Python. We build multiple agents using the different approaches the framework provides, to see its capabilities and use cases. It promises to be an exciting ride!

Prerequisites

- AWS account

- AWS CLI set up locally

- Python 3

- Model access enabled in Amazon Bedrock for the required models

What we will cover

- What the AWS Multi-Agent Orchestrator is.

- The multiple approaches to building an agent.

- Some tests to see it in action.

- Some observations.

Introduction

This article uses the AWS Multi-Agent Orchestrator to build multi-agent systems. The goal is to get an introduction to the framework by seeing what is possible and trying out some use cases.

Let's get started!

What is the AWS Multi-Agent Orchestrator?

The AWS Multi-Agent Orchestrator is an open-source framework for building AI agents and multi-agent systems. It has built-in features such as intelligent routing, intent classification, context management, and agent overlap analysis, among others.

The Multi-Agent Orchestrator uses a Classifier that selects the right agent for every user request.
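
To make the routing idea concrete, here is a toy sketch in plain Python. It is not the framework's classifier (which relies on an LLM); it simply picks the agent whose description shares the most words with the user input. The `AGENTS` registry, `tokenize`, and `classify` are hypothetical names made up for this illustration.

```python
import re
from typing import Dict, Optional, Set

# Hypothetical registry: agent name -> short description (illustration only).
AGENTS: Dict[str, str] = {
    "Football Insights Agent": "football analysis team strategies player stats match predictions",
    "Life Hacks & Motivation Agent": "life hacks productivity tips motivation goals self-improvement",
}


def tokenize(text: str) -> Set[str]:
    """Lowercase the text and return its alphabetic words as a set."""
    return set(re.findall(r"[a-z]+", text.lower()))


def classify(user_input: str, agents: Dict[str, str]) -> Optional[str]:
    """Return the agent whose description overlaps most with the input.

    Returns None when no description shares a single word with the input.
    """
    words = tokenize(user_input)
    best_name, best_score = None, 0
    for name, description in agents.items():
        score = len(words & tokenize(description))
        if score > best_score:
            best_name, best_score = name, score
    return best_name


if __name__ == "__main__":
    print(classify("Give me football match predictions", AGENTS))
    print(classify("I need motivation to succeed in goals", AGENTS))
```

A real classifier also looks at the chat history, which this sketch ignores. The point is only that agent descriptions drive the selection, so they are worth writing carefully.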

Main Components of the Orchestrator

The main components are:

Orchestrator

The central coordinator. It handles the flow of information between all other components, such as Classifiers, Agents, Storage, and Retrievers.

It also handles error scenarios internally and has a fallback mechanism.

Classifier

Examines user input alongside the previous chat history and the agent descriptions to identify the most appropriate agent for each request. Custom classifiers can also be used.

Agents

From prebuilt agents to custom agents, this is where you create and tailor functionality for agents. You can also extend prebuilt agents, as we do later in the article.

Conversation Storage

Used to save conversation history. It has built-in in-memory and DynamoDB support and can also use custom storage solutions.

Retrievers

Used to enhance the agent's performance by providing context and relevant information. We can attach a Knowledge Base here to give the agent more context.
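
As an illustration of the Conversation Storage component described above, the sketch below keeps chat history in memory and trims it to a maximum number of message pairs. It is a toy stand-in, not the framework's storage class: `ToyInMemoryStorage` and its methods are hypothetical, and keying history by user, session, and agent is an assumption made for this example.

```python
from collections import defaultdict
from typing import Dict, List, Tuple

Message = Dict[str, str]  # e.g. {"role": "user", "content": "..."}


class ToyInMemoryStorage:
    """Keeps chat history per (user, session, agent) and trims old messages."""

    def __init__(self, max_message_pairs: int = 10) -> None:
        # One pair is a user message plus an assistant reply.
        self.max_messages = max_message_pairs * 2
        self._store: Dict[Tuple[str, str, str], List[Message]] = defaultdict(list)

    def save(self, user_id: str, session_id: str, agent_id: str,
             role: str, content: str) -> None:
        """Append a message and drop the oldest ones beyond the cap."""
        history = self._store[(user_id, session_id, agent_id)]
        history.append({"role": role, "content": content})
        if len(history) > self.max_messages:
            del history[: len(history) - self.max_messages]

    def fetch(self, user_id: str, session_id: str, agent_id: str) -> List[Message]:
        """Return a copy of the stored history (empty list if none)."""
        return list(self._store.get((user_id, session_id, agent_id), []))


if __name__ == "__main__":
    storage = ToyInMemoryStorage(max_message_pairs=1)
    storage.save("user123", "s1", "football", "user", "Who is Ronaldo?")
    storage.save("user123", "s1", "football", "assistant", "A footballer.")
    storage.save("user123", "s1", "football", "user", "Which club?")
    print(storage.fetch("user123", "s1", "football"))
```

Capping the history keeps prompts short, at the cost of the agent forgetting older turns. A persistent backend such as DynamoDB would expose the same save and fetch idea with a database behind it.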

Project Setup

Before we start creating the agents, we need to set up our environment. Head over to the terminal and run the following:

mkdir aws_orchestrator

cd aws_orchestrator && touch app.py

python -m venv venv

source venv/bin/activate

These commands create and activate a virtual environment for the Python app.
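
If you want to confirm that the virtual environment is really active before installing packages, a small check like the one below can help. It is only a convenience sketch; `in_virtualenv` is a helper name made up for this example, and it relies on the standard library only.

```python
import sys


def in_virtualenv() -> bool:
    """Return True when the interpreter runs inside a virtual environment.

    Inside a venv, sys.prefix points at the environment while
    sys.base_prefix still points at the base Python installation.
    """
    return sys.prefix != sys.base_prefix


if __name__ == "__main__":
    print("Virtual environment active:", in_virtualenv())
```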

Next, install the multi-agent-orchestrator package:

pip install "multi-agent-orchestrator[all]"

Using the Inbuilt Bedrock Agent with the Classifier Approach

First, we explore how to build an inline agent using the built-in Bedrock agent. We will customize it to support our use case.

Head over to app.py and input the following:

import uuid
import asyncio
import sys

from multi_agent_orchestrator.orchestrator import MultiAgentOrchestrator, OrchestratorConfig
from multi_agent_orchestrator.agents import (BedrockLLMAgent,
                                             BedrockLLMAgentOptions,
                                             AgentResponse,
                                             AgentCallbacks)

orchestrator = MultiAgentOrchestrator(options=OrchestratorConfig(
    LOG_AGENT_CHAT=True,
    LOG_CLASSIFIER_CHAT=True,
    LOG_CLASSIFIER_RAW_OUTPUT=True,
    LOG_CLASSIFIER_OUTPUT=True,
    LOG_EXECUTION_TIMES=True,
    MAX_RETRIES=3,
    MAX_MESSAGE_PAIRS_PER_AGENT=10,
    USE_DEFAULT_AGENT_IF_NONE_IDENTIFIED=True
))

class BedrockLLMAgentCallbacks(AgentCallbacks):
    def on_llm_new_token(self, token: str) -> None:
        # Print each token as it arrives
        print(token, end='', flush=True)

football_agent = BedrockLLMAgent(BedrockLLMAgentOptions(
    name="Football Insights Agent",
    description="Expert in football analysis, covering team strategies, player stats, match predictions, and historical comparisons.",
    callbacks=BedrockLLMAgentCallbacks()
))
orchestrator.add_agent(football_agent)

life_hack_agent = BedrockLLMAgent(BedrockLLMAgentOptions(
    name="Life Hacks & Motivation Agent",
    description="Provides life hacks for efficiency, productivity tips, motivational insights, and goal-setting strategies for self-improvement.",
    callbacks=BedrockLLMAgentCallbacks()
))
orchestrator.add_agent(life_hack_agent)

async def handle_request(_orchestrator: MultiAgentOrchestrator, _user_input: str, _user_id: str, _session_id: str):
    response: AgentResponse = await _orchestrator.route_request(_user_input, _user_id, _session_id)
    print("\nMetadata:")
    print(f"Selected Agent: {response.metadata.agent_name}")
    # Both branches print the same field in this example
    if response.streaming:
        print('Response:', response.output.content[0]['text'])
    else:
        print('Response:', response.output.content[0]['text'])

if __name__ == "__main__":
    USER_ID = "user123"
    SESSION_ID = str(uuid.uuid4())
    print("Welcome to the interactive Multi-Agent system. Type 'quit' to exit.")
    while True:
        # Get user input
        user_input = input("\nYou: ").strip()
        if user_input.lower() == 'quit':
            print("Exiting the program. Goodbye!")
            sys.exit()
        # Run the async function
        asyncio.run(handle_request(orchestrator, user_input, USER_ID, SESSION_ID))

This creates two agents: a football agent and a life hack agent.

Run the app: python3 app.py

Input Prompt 1:

Who is cristiano ronaldo

Resulting output:

As can be seen, the Classifier routed the request to the right agent.

Input Prompt 2:

I need motivation to succeed in goals

Resulting output:

The Classifier also correctly routed the request to the right agent.

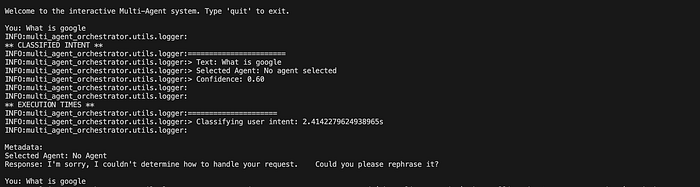

Adding a Default Agent

So far we have no default agent, so when we provide an input that no agent covers, the orchestrator does not respond gracefully.

Input Prompt:

I need motivation to succeed in goals

Resulting output:

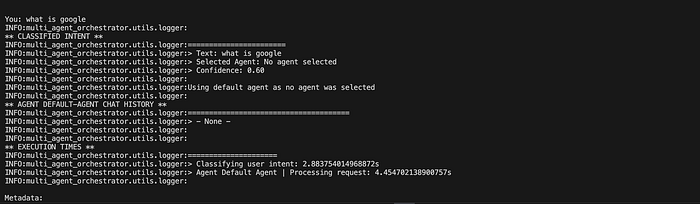

Let's fix this by updating the code to include a default agent. Update the orchestrator in app.py:

orchestrator = MultiAgentOrchestrator(options=OrchestratorConfig(

LOG_AGENT_CHAT=True,

LOG_CLASSIFIER_CHAT=True,

LOG_CLASSIFIER_RAW_OUTPUT=True,

LOG_CLASSIFIER_OUTPUT=True,

LOG_EXECUTION_TIMES=True,

MAX_RETRIES=3,

MAX_MESSAGE_PAIRS_PER_AGENT=10,

USE_DEFAULT_AGENT_IF_NONE_IDENTIFIED=True

),

default_agent=BedrockLLMAgent(BedrockLLMAgentOptions(

name="Default Agent",

streaming=False,

description="This is the default agent that handles general queries and tasks.",

))

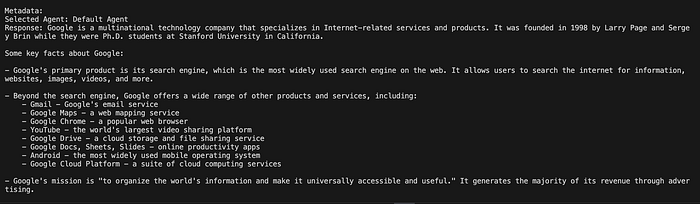

)Rerun the app and enter the same user prompt. This time it uses the default agent to attempt the question:
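
The fallback behaviour can be pictured with a small sketch in plain Python. This is only an approximation of the idea behind USE_DEFAULT_AGENT_IF_NONE_IDENTIFIED, not the framework's code; `resolve_agent`, `AGENT_NAMES`, and the argument names are hypothetical.

```python
from typing import List, Optional

# Hypothetical list of registered agent names (illustration only).
AGENT_NAMES: List[str] = ["Football Insights Agent", "Life Hacks & Motivation Agent"]


def resolve_agent(selected: Optional[str],
                  registered: List[str],
                  default_agent: Optional[str],
                  use_default_if_none: bool = True) -> Optional[str]:
    """Decide which agent answers a request.

    selected is the classifier's choice, or None when it found no match.
    Returns the chosen agent name, or None if no agent can answer.
    """
    if selected in registered:
        return selected
    if use_default_if_none and default_agent is not None:
        return default_agent
    return None


if __name__ == "__main__":
    # The classifier found nothing, so the default agent takes over.
    print(resolve_agent(None, AGENT_NAMES, "Default Agent"))
    # Without a default agent there is nobody to answer.
    print(resolve_agent(None, AGENT_NAMES, None))
```

The sketch shows why the flag alone is not enough: with no default agent configured, there is nothing to fall back to.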

Chaining Agents

Suppose we want an ordered flow of agent collaboration, rather than the Classifier choosing the single appropriate agent.

We want some agents to work together sequentially to give an output. This is where ChainAgent comes in.

It does this by using the output of one agent as the input for the next. This creates an agent chain.
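
Before wiring up real agents, the chaining idea itself can be sketched with ordinary functions. This is a toy model under stated assumptions, not the ChainAgent implementation: `run_chain` and the three step functions are hypothetical, and returning a default output when a step fails is an assumed behaviour chosen for this illustration.

```python
from typing import Callable, List

Step = Callable[[str], str]


def run_chain(steps: List[Step], user_input: str, default_output: str) -> str:
    """Feed the output of each step into the next one.

    If any step raises, stop and return default_output instead.
    """
    current = user_input
    for step in steps:
        try:
            current = step(current)
        except Exception:
            return default_output
    return current


# Stand-ins for the research, analysis, and report agents.
def research(text: str) -> str:
    return f"research({text})"


def analyse(text: str) -> str:
    return f"analysis({text})"


def report(text: str) -> str:
    return f"report({text})"


if __name__ == "__main__":
    print(run_chain([research, analyse, report], "LLM",
                    "The chain processing encountered an issue."))
```

The order of the list is the order of execution, which is the main difference from classifier-based routing, where a single agent is picked per request.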

Create a new file called chained.py and input the following:

from multi_agent_orchestrator.agents import (BedrockLLMAgent,
                                             BedrockLLMAgentOptions,
                                             AgentCallbacks, ChainAgent, ChainAgentOptions)

class BedrockLLMAgentCallbacks(AgentCallbacks):
    def on_llm_new_token(self, token: str) -> None:
        # Print each token as it arrives
        print(token, end='', flush=True)

research_agent = BedrockLLMAgent(BedrockLLMAgentOptions(
    name="Research Agent",
    description="Analyzes and validates the given content, expanding on relevant topics and ensuring accuracy.",
    callbacks=BedrockLLMAgentCallbacks(),
    streaming=True
))

analysis_agent = BedrockLLMAgent(BedrockLLMAgentOptions(
    name="Analysis Agent",
    description="Extracts key insights, trends, and relevant points from the content, identifying core themes.",
    callbacks=BedrockLLMAgentCallbacks(),
    streaming=True
))

report_agent = BedrockLLMAgent(BedrockLLMAgentOptions(
    name="Report Agent",
    description="Creates a structured report summarizing the research findings and key insights into a coherent format.",
    callbacks=BedrockLLMAgentCallbacks(),
    streaming=True
))

# The agents run in the order listed here
options = ChainAgentOptions(
    name='ChainAnalysisAgent',
    description='A research, analysis and report generation chain of agents that takes user research input and gives a final report after proper research & analysis',
    agents=[research_agent, analysis_agent, report_agent],
    default_output='The chain processing encountered an issue.',
    save_chat=True
)

chain_agent = ChainAgent(options)

We have 3 agents chained together. The chained agent takes content on any topic from the user, carries out research and analysis, and returns a final report.

Using Chained Agent with Orchestrator

Let's put this chained agent to the test. Modify the app.py file to import and add this new agent to the orchestrator:

from chained import chain_agent

# previous code

# Add the chained agent
orchestrator.add_agent(chain_agent)

# previous code

Now rerun the app.py file:

Input Prompt:

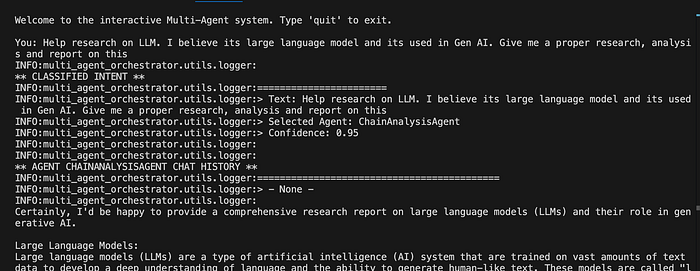

Help research on LLM. I believe its large language model and its used in Gen AI. Give me a proper research, analysis and report on this

Resulting output:

It is carrying out the needed analysis to produce the report. As can be seen, it uses the information from the research agent to generate insights and reports.

Using the Supervisor Agent

The SupervisorAgent is an advanced orchestration component that enables sophisticated multi-agent coordination. It uses a unique agent-as-tools architecture that exposes team members to a supervisor agent as invocable tools.

Rather than running agents sequentially, it enables parallel processing, allowing the lead agent to switch context between multiple team agents as it sees fit.

This means it could call Agent 1 first and then Agent 2 for one input, while also calling Agent 2 first and then Agent 1 for another input.
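
The agent-as-tools idea, where the call order depends on the input, can be sketched in plain Python. This is a deliberately simple stand-in: the real lead agent lets an LLM decide which team member to invoke, while this toy orders the calls by where a keyword appears in the request. `TEAM`, `plan`, and `supervise` are hypothetical names for this illustration.

```python
from typing import Callable, Dict, List

Tool = Callable[[str], str]

# Hypothetical team members exposed to the lead as callable tools.
TEAM: Dict[str, Tool] = {
    "ticket": lambda request: "ticket created",
    "card": lambda request: "card issue reviewed",
}


def plan(request: str, team: Dict[str, Tool]) -> List[str]:
    """Pick the tools mentioned in the request, in order of first mention."""
    text = request.lower()
    hits = [(text.find(name), name) for name in team if name in text]
    return [name for _, name in sorted(hits)]


def supervise(request: str, team: Dict[str, Tool]) -> List[str]:
    """Call each planned tool in turn and collect the labelled results."""
    return [f"{name}: {team[name](request)}" for name in plan(request, team)]


if __name__ == "__main__":
    print(supervise("My credit card has issues, create a ticket for me", TEAM))
    print(supervise("Create a ticket, then check my card", TEAM))
```

The two requests use the same team but trigger the tools in a different order, which is the behaviour described above.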

It can be used directly or with the classifier. In the example below, we use it with the orchestrator, just like we did with the chained agent.

Create a new file called supervisor.py and input the following:

from multi_agent_orchestrator.agents import (BedrockLLMAgent,
                                             BedrockLLMAgentOptions,
                                             AgentCallbacks,
                                             SupervisorAgent,
                                             SupervisorAgentOptions)

class BedrockLLMAgentCallbacks(AgentCallbacks):
    def on_llm_new_token(self, token: str) -> None:
        # Print each token as it arrives
        print(token, end='', flush=True)

ticket_agent = BedrockLLMAgent(BedrockLLMAgentOptions(
    name="Ticket Agent",
    description="Creates a ticket about customer issues",
    callbacks=BedrockLLMAgentCallbacks(),
    streaming=True
))

credit_card_agent = BedrockLLMAgent(BedrockLLMAgentOptions(
    name="Credit Card Agent",
    description="Handles card issues, asks for customer card details when needed",
    callbacks=BedrockLLMAgentCallbacks(),
    streaming=True
))

# The lead agent coordinates the two team agents
supervisor_agent = SupervisorAgent(SupervisorAgentOptions(
    lead_agent=BedrockLLMAgent(BedrockLLMAgentOptions(
        name="Support Team Lead",
        description="Coordinates support inquiries"
    )),
    team=[credit_card_agent, ticket_agent]
))

We have 3 agents: 2 team members and a lead agent. The lead agent determines which agent it needs to call, or whether it needs to use both team agents for a single user request.

Using Supervisor Agent with Orchestrator

Let’s put this Supervisor agent to the test. Modify the app.py file to import and add this new agent to the orchestrator:

from supervisor import supervisor_agent

# ... previous code ...

# Add the Supervisor agent
orchestrator.add_agent(supervisor_agent)

Now rerun the app.py file:

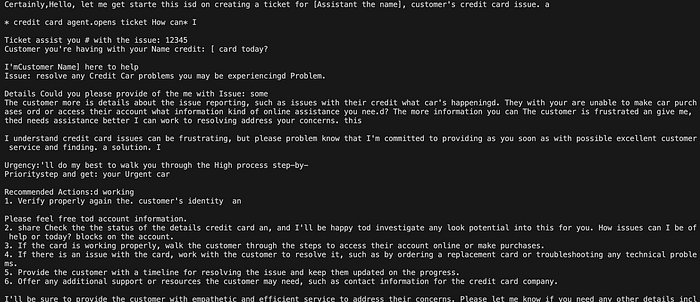

Input Prompt:

My credit card has issues, create a ticket for me, and I need help resolving it

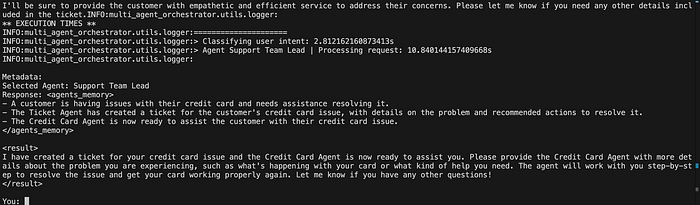

Resulting output:

The lead supervisor agent was able to switch between its team agents based on the request of the user. It combined both agents to give a useful response.

My Observations

- I haven't seen any USP (Unique Selling Point) that sets this framework apart from CrewAI. What comes to mind is the ease of integration with other AWS services, since it is an AWS framework.

- It is a good initiative for building agents quickly. I look forward to trying out its other components, such as custom storage, and using it with existing AWS services like Lambda, Bedrock Agents, and others.

Closing

This has been an interesting ride. We not only got to build an AI Agent using the AWS orchestrator, but we also got to try out its multiple features/components to build AI agents.

You can find the complete source code here.

Excited to see what you build!